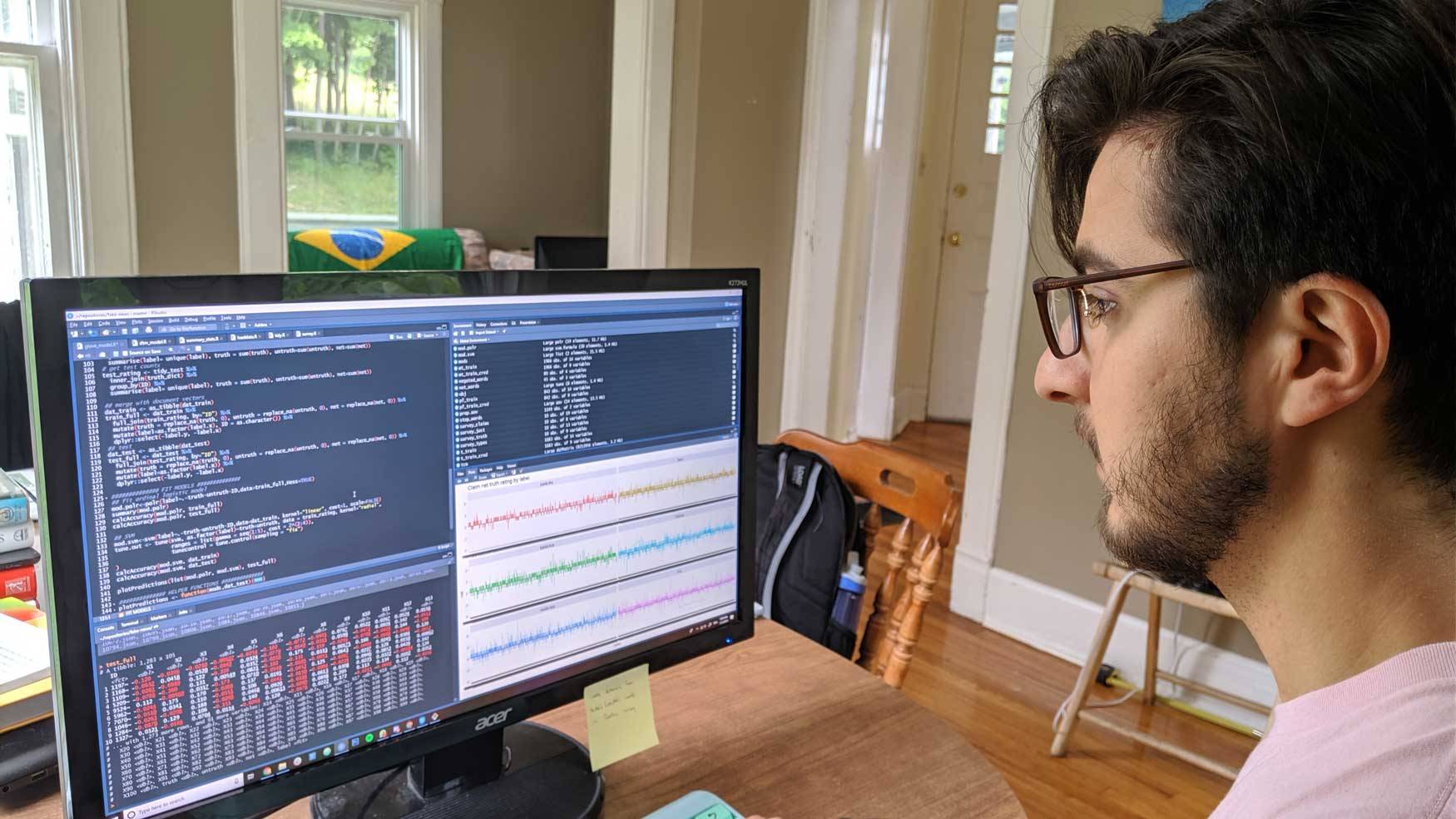

During the summer, Colgate students are applying their liberal arts know-how in a variety of real-world settings, and they are keeping our community posted on their progress. Computer science major Caio Rodrigues Faria Brighenti ’20, from São Paulo, Brazil, describes his research on fake news.

This summer, I am conducting research on fake news, or intentionally misleading information. I intend to learn more about the nature of fake news and then create a program to help people spot it.

Studies show that fake news spreads faster online than real news. Given the threat that fake news poses to our society, artificial intelligence researchers around the world have been designing computer models that can automatically assess whether a given piece of information is an accurate report. This form of automated fact-checking can go a long way toward identifying and eliminating fake news before it can spread.

However, using complicated models for this task has downsides. Despite being highly accurate, these models are almost always unable to communicate why a given piece of information was classified as true or false. In other words, we can’t know for certain what led to that prediction.

I am not approaching fake news detection with the sole objective of creating a state-of-the-art model with the highest accuracy possible. Instead, I am working toward an interpretable approach. While my final model might not be quite as accurate as those at the forefront of the field, it will offer more opportunities to learn about the properties of fake news.
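As a rough illustration of what "interpretable" can mean here, the sketch below scores a claim by adding up one weight per word, so every prediction can be traced back to the words that drove it. This is not the model used in this project: the smoothed log-odds weighting is just one simple, commonly used technique, and the toy claims, labels, and function names (`word_weights`, `explain`) are hypothetical and invented for the example.

```python
import math
from collections import Counter

# Hypothetical toy data, invented purely for illustration.
# It is NOT drawn from PolitiFact or from the survey.
TOY_CLAIMS = [
    ("shocking secret cure doctors hate", "fake"),
    ("miracle cure shocking results", "fake"),
    ("city council approves budget", "real"),
    ("council publishes budget report", "real"),
]


def word_weights(claims):
    """Return a smoothed log-odds weight per word (positive leans 'fake')."""
    counts = {"fake": Counter(), "real": Counter()}
    for text, label in claims:
        counts[label].update(text.lower().split())
    vocab = set(counts["fake"]) | set(counts["real"])
    # Add-one smoothing keeps words seen in only one class from
    # producing a zero probability.
    total_fake = sum(counts["fake"].values()) + len(vocab)
    total_real = sum(counts["real"].values()) + len(vocab)
    return {
        word: math.log((counts["fake"][word] + 1) / total_fake)
        - math.log((counts["real"][word] + 1) / total_real)
        for word in vocab
    }


def explain(text, weights):
    """Score a claim and list each known word's contribution to the score."""
    parts = [(w, weights[w]) for w in text.lower().split() if w in weights]
    parts.sort(key=lambda pair: abs(pair[1]), reverse=True)
    return sum(value for _, value in parts), parts


weights = word_weights(TOY_CLAIMS)
score, parts = explain("Shocking miracle budget", weights)
print(f"score = {score:+.2f} (positive leans fake)")
for word, value in parts:
    print(f"  {word:10s} {value:+.2f}")
```

Because the score is a plain sum, the printed list is the full explanation of the prediction. A more complicated model might classify more accurately, but it would not offer this kind of word-by-word account.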

I began my project by conducting a survey on fake news detection. This survey presented respondents with several different claims and articles, asking them to judge whether the claims seemed truthful. The objective here was to get at the core of how people intuitively detect fake news. If I can better understand how people assess validity, I can make smarter decisions about what information to incorporate in my model.

Currently, I am organizing my survey data and my dataset of more than 15,000 short claims labeled by PolitiFact experts. PolitiFact is a fact-checking website that rates the accuracy of claims by elected officials and others on its Truth-O-Meter. Working with text data of such magnitude has been a fascinating challenge for me; I’ve developed critical skills for statistical analysis of language.

During the next six weeks, I will primarily work on designing my model, refining it, and analyzing its conclusions. While I have already learned a lot about the textual properties of fake news, I am excited to see where my research takes me. If my results are interesting enough, I will develop them into my senior thesis for computer science.